Assemble, combine, compile, translate 12 discerned and randomly gathered objects by one of your peers into a single rigorous and comprehensive narrative

This was the brief for a short project we worked on. It was my first attempt at experimenting with ML. The project is broken down into 3 'small' experiments:

- 01 - Objects-Viewer

- 02 - Image "Painting"

- 03 - Trained convnet (App)

01 - Objects-Viewer

An OFX (openFrameworks) app based on the ConvnetViewer from the ml4a-ofx package. I added real-time object detection: the app detects the objects, and the different stages of the process are visible on the left-hand side.

02 - Image "Painting"

These visualisations are rendered using a package called CatsEyes and the ConvNetJS framework by Andrej Karpathy.

03 - Trained convnet (App)

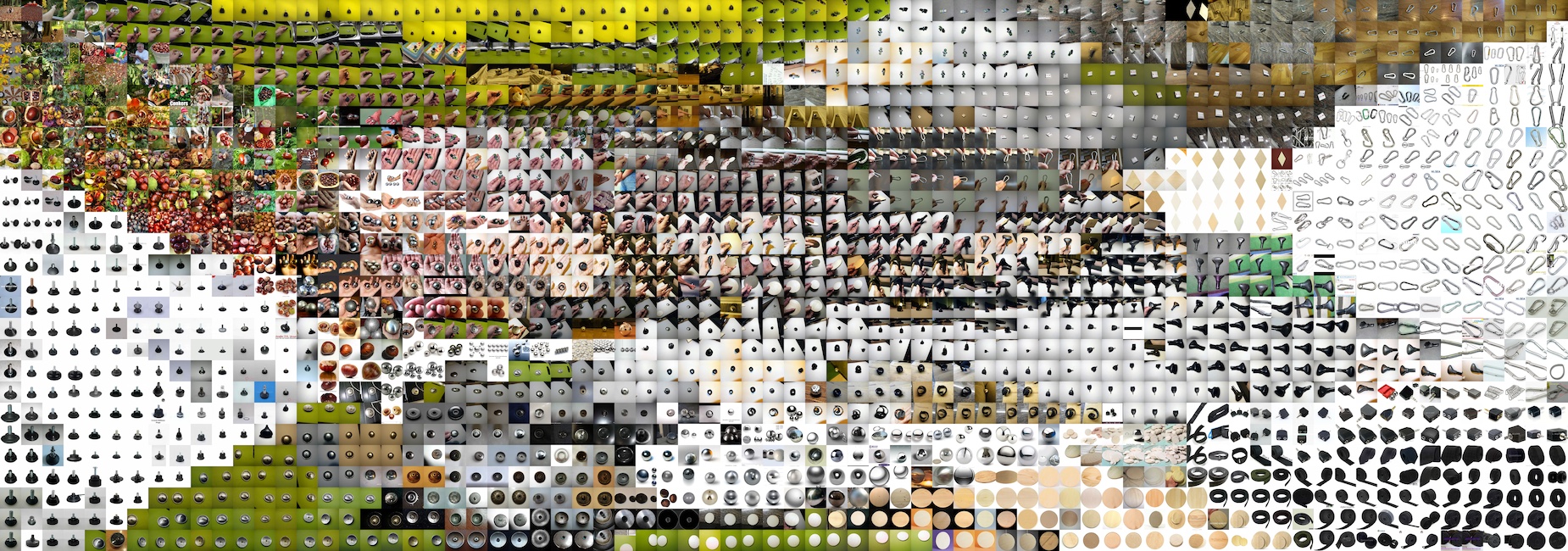

This part uses TensorFlow. I basically followed this guide and trained a model on top of Google's Inception on my 12 objects. I then built the model into an iOS app which can recognise the objects. The results are quite impressive: even an object which is almost completely masked is still detected accurately. You can view a video of the app in action here.

Preview of the dataset used to train the model. (HD version)
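
As a rough illustration of what training "on top of" a pretrained network such as Inception generally means: the pretrained layers stay fixed, and only a new final classification layer is fitted on the feature vectors they produce. The sketch below shows that last step in plain Python. It is not the TensorFlow code used for this project. The feature vectors are made-up stand-ins for Inception's outputs, it uses 3 classes rather than 12, and every function name in it (`make_toy_features`, `class_scores`, `train_top_layer`, `predict`) is hypothetical.

```python
import math
import random


def make_toy_features(num_classes=3, per_class=20, dim=4, seed=0):
    """Build made-up feature vectors (an assumption, not real Inception output)."""
    rng = random.Random(seed)
    features, labels = [], []
    for _ in range(per_class):
        for label in range(num_classes):
            # Class k is centred on the k-th unit vector, plus small noise.
            features.append(
                [(1.0 if i == label else 0.0) + rng.gauss(0.0, 0.1) for i in range(dim)]
            )
            labels.append(label)
    return features, labels


def class_scores(weights, biases, x):
    """Linear score per class: dot(w, x) + b."""
    return [sum(w_i * x_i for w_i, x_i in zip(w, x)) + b for w, b in zip(weights, biases)]


def softmax(scores):
    """Turn raw class scores into probabilities (shifted by the max for stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_top_layer(features, labels, num_classes, epochs=200, learning_rate=0.5):
    """Fit a softmax layer on fixed feature vectors with per-sample gradient descent."""
    dim = len(features[0])
    weights = [[0.0] * dim for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(features, labels):
            probs = softmax(class_scores(weights, biases, x))
            for c in range(num_classes):
                # Gradient of the cross-entropy loss with respect to the score of class c.
                grad = probs[c] - (1.0 if c == y else 0.0)
                for i in range(dim):
                    weights[c][i] -= learning_rate * grad * x[i]
                biases[c] -= learning_rate * grad
    return weights, biases


def predict(weights, biases, x):
    """Return the index of the highest-scoring class."""
    scores = class_scores(weights, biases, x)
    return max(range(len(scores)), key=lambda c: scores[c])


if __name__ == "__main__":
    features, labels = make_toy_features()
    weights, biases = train_top_layer(features, labels, num_classes=3)
    correct = sum(predict(weights, biases, x) == y for x, y in zip(features, labels))
    print(f"training accuracy: {correct / len(labels):.2f}")
```

In a real retraining run the feature vectors would come from passing each photo of the 12 objects through the frozen network, and only the small layer trained here would need to learn anything new, which is why a modest dataset can be enough.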